Research

Our aim is to develop fundamental theories and effective methods of machine learning, which have various applications in data analysis. The following are our ongoing topics.

Machine Learning and Information Geometry

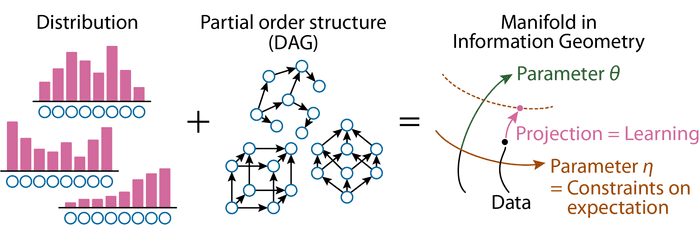

We study the relationship between machine learning and information geometry.

Information geometry enables us to flexibly treat machine learning models in a principled manner. For example, the set of model distributions of a Boltzmann machine, which is a basic generative model in machine learning, can be viewed as a dually-flat manifold, a canonical structure in information geometry.
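The dually flat view can be made concrete with a minimal sketch, assuming a tiny, fully visible Boltzmann machine over binary variables whose states can be enumerated exhaustively. The function names (`sufficient_stats`, `log_partition`, `distribution`, `expectation_params`) are hypothetical and chosen for illustration only. The natural parameters theta (biases and pairwise weights) and the expectation parameters eta (means of the sufficient statistics) form the two coordinate systems, and eta is the gradient of the log-partition function with respect to theta.

```python
import itertools
import numpy as np

def sufficient_stats(n):
    """One row per binary state; columns are x_i followed by x_i * x_j for i < j."""
    states = np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)
    pairs = list(itertools.combinations(range(n), 2))
    pair_feats = np.array([[s[i] * s[j] for (i, j) in pairs] for s in states])
    return np.hstack([states, pair_feats])

def log_partition(theta, F):
    """psi(theta) = log sum_x exp(theta . F(x)), by brute-force enumeration."""
    return np.log(np.sum(np.exp(F @ theta)))

def distribution(theta, F):
    """Model distribution p(x) = exp(theta . F(x) - psi(theta)) over all states."""
    return np.exp(F @ theta - log_partition(theta, F))

def expectation_params(theta, F):
    """eta = E_p[F(x)], the dual coordinates of theta."""
    return distribution(theta, F) @ F

# Illustrative parameters for n = 3: three biases, then three pairwise weights.
F = sufficient_stats(3)
theta = np.array([0.5, -0.2, 0.1, 0.3, -0.4, 0.2])
eta = expectation_params(theta, F)
print("eta =", eta)
```

Exhaustive enumeration is only feasible for a handful of variables; it is used here purely to keep the correspondence between theta and eta explicit.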

We are trying to design powerful machine learning models by integrating information geometry and partial order structures, which lets us incorporate interactions between variables. Our approach can handle various machine learning tasks such as feature selection, representation learning, and matrix/tensor decomposition.

Selected publications:

- Tensor balancing presented at ICML 2017 [Slide] [Poster]

- Tensor decomposition presented at NeurIPS 2018 [Slide] [Poster]

- Tucker low-rank approximation presented at NeurIPS 2021 [Slide]

Part of this project is supported by JST PRESTO "Social Design" Area and JSPS KAKENHI (Grants-in-Aid for Scientific Research).

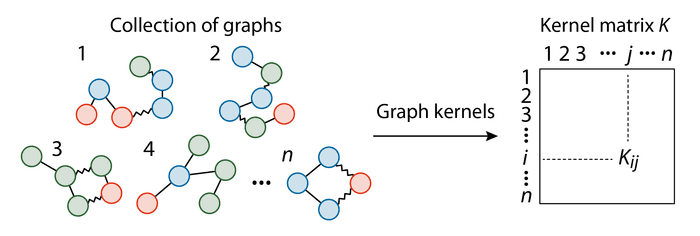

Machine Learning with Discrete Structure

Discrete structures, such as graphs, appear in a wide range of applications including bioinformatics and chemoinformatics. Since most machine learning methods are designed for continuous variables, how to integrate such discreteness into continuous spaces is of high interest. A representative method is the graph kernel, which measures the similarity between graphs and enables us to apply kernel-based methods to graph-structured objects.
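To illustrate what a graph kernel computes, here is a minimal sketch of one well-known example, the Weisfeiler-Lehman subtree kernel. It is written from scratch for illustration and is not the API of any particular library; the graph representation (an adjacency dict plus a label dict) and the names `wl_features` and `wl_kernel` are assumptions of this sketch.

```python
from collections import Counter

def wl_features(adj, labels, iterations=2):
    """Count vertex labels over rounds of Weisfeiler-Lehman label refinement.

    adj: dict mapping each vertex to a list of its neighbours.
    labels: dict mapping each vertex to an initial (hashable) label.
    """
    feats = Counter((0, lab) for lab in labels.values())
    current = dict(labels)
    for it in range(1, iterations + 1):
        refined = {}
        for v in adj:
            # New label = own label plus the sorted multiset of neighbour labels.
            neigh = tuple(sorted(str(current[u]) for u in adj[v]))
            refined[v] = (str(current[v]), neigh)
        current = refined
        feats.update((it, lab) for lab in current.values())
    return feats

def wl_kernel(g1, g2, iterations=2):
    """Inner product of the label-count vectors of two graphs."""
    f1 = wl_features(*g1, iterations)
    f2 = wl_features(*g2, iterations)
    return sum(f1[k] * f2[k] for k in f1.keys() & f2.keys())

# Two toy graphs with identical vertex labels: a triangle and a path.
triangle = ({0: [1, 2], 1: [0, 2], 2: [0, 1]}, {0: "C", 1: "C", 2: "C"})
path = ({0: [1], 1: [0, 2], 2: [1]}, {0: "C", 1: "C", 2: "C"})
print(wl_kernel(triangle, path, iterations=1))
```

Because the kernel is an explicit inner product of count vectors, the resulting Gram matrix is positive semidefinite and can be passed to any kernel-based method.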

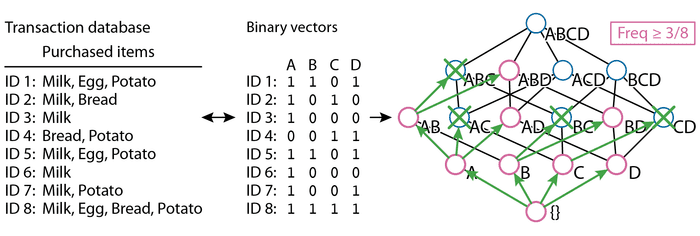

We are also interested in how to learn discrete structures from unstructured objects. One approach is to use Formal Concept Analysis (FCA), which extracts a lattice structure using algebraic properties of the data.
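As a small illustration of FCA, the sketch below enumerates the formal concepts of a toy binary context by brute force. The context, the object and attribute names, and the helper functions are all hypothetical; practical FCA algorithms avoid enumerating every subset of objects, which this naive version does.

```python
from itertools import combinations

def common_attributes(objects, context, all_attributes):
    """Attributes shared by every object in `objects` (all attributes if empty)."""
    result = set(all_attributes)
    for o in objects:
        result &= context[o]
    return result

def objects_having(attributes, context):
    """Objects that possess every attribute in `attributes`."""
    return {o for o, attrs in context.items() if attributes <= attrs}

def formal_concepts(context):
    """Return all (extent, intent) pairs closed under the two maps above."""
    all_attributes = set().union(*context.values())
    concepts = set()
    objs = list(context)
    for r in range(len(objs) + 1):
        for subset in combinations(objs, r):
            intent = common_attributes(subset, context, all_attributes)
            extent = objects_having(intent, context)
            concepts.add((frozenset(extent), frozenset(intent)))
    return concepts

# Toy context: which animal has which attribute.
context = {
    "duck": {"flies", "swims"},
    "eagle": {"flies"},
    "trout": {"swims"},
}
for extent, intent in sorted(formal_concepts(context), key=lambda c: len(c[0])):
    print(sorted(extent), sorted(intent))
```

Ordering the concepts by inclusion of their extents yields the concept lattice.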

Selected publications:

- Graph kernel library published in Bioinformatics [Library (R)] [Library (Python)]

- Graph kernel analysis presented at NIPS 2015 [Poster]

- Graph representation learning presented at NeurIPS 2018 R2L Workshop

Part of this project is supported by JSPS KAKENHI (Grants-in-Aid for Scientific Research).

Significant Pattern Mining

Feature selection, the detection of informative variables in high-dimensional data, is a fundamental task in machine learning, but most existing methods can find only single features or linear interactions of features. We study how to find patterns, that is, multiplicative/combinatorial (potentially higher-order) interactions between variables. We integrate statistical tests into pattern mining algorithms to guarantee the statistical significance of detected interactions.
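The general idea can be sketched as follows, assuming binary class labels and transactions given as sets of items. Each candidate itemset is tested for enrichment in the positive class with a one-sided Fisher's exact test, and a Bonferroni correction over all candidates controls the family-wise error rate. Bonferroni is used here only as a simple, conservative stand-in and is an assumption of this sketch, as are the function names and the brute-force enumeration of candidates.

```python
from itertools import combinations
from math import comb

def fisher_one_sided(a, n_pattern, n_pos, n_total):
    """P(X >= a) for X hypergeometric: n_pattern draws from n_total samples,
    n_pos of which are positive."""
    upper = min(n_pattern, n_pos)
    tail = sum(comb(n_pos, k) * comb(n_total - n_pos, n_pattern - k)
               for k in range(a, upper + 1))
    return tail / comb(n_total, n_pattern)

def significant_patterns(rows, labels, max_size=2, alpha=0.05):
    """rows: list of item sets; labels: list of 0/1 class labels."""
    items = sorted(set().union(*rows))
    n_total, n_pos = len(rows), sum(labels)
    candidates = [frozenset(c)
                  for size in range(1, max_size + 1)
                  for c in combinations(items, size)]
    threshold = alpha / len(candidates)  # Bonferroni-corrected significance level
    found = []
    for pattern in candidates:
        # Labels of the samples that contain every item of the pattern.
        support = [y for row, y in zip(rows, labels) if pattern <= row]
        if not support:
            continue
        p = fisher_one_sided(sum(support), len(support), n_pos, n_total)
        if p <= threshold:
            found.append((set(pattern), p))
    return sorted(found, key=lambda t: t[1])

# Toy data: only the combination of "a" and "b" separates the classes.
rows = [{"a", "b"}] * 6 + [{"a"}] * 3 + [{"b"}] * 3
labels = [1] * 6 + [0] * 6
print(significant_patterns(rows, labels))
```

In the toy data neither item is informative enough on its own, which is the situation where searching over combinations matters. The number of candidates grows exponentially with `max_size`, so a correction that counts every candidate quickly becomes too conservative to detect anything.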

This research topic is based on joint work with Prof. Dr. Karsten Borgwardt's group at ETH Zürich. Please see significant-patterns.org for more details.

Selected publications:

- Significant itemset mining presented at SIGKDD 2015 [Video]

- Significant pattern mining for continuous variables accepted to IJCAI 2019 [Slide] [Poster]

- Review on significant pattern mining (in Japanese)

Part of this project has been supported by JST PRESTO "Big Data" Area and JSPS KAKENHI (Grants-in-Aid for Scientific Research).

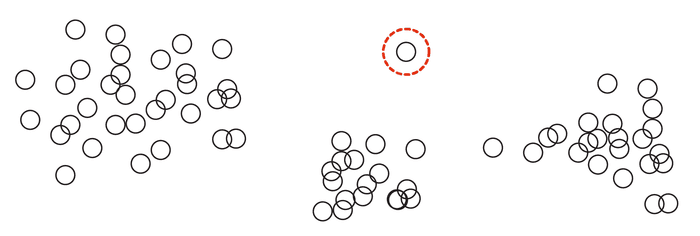

Developing Various Machine Learning Methods

We are also developing efficient and/or effective machine learning methods for tasks such as clustering and outlier detection.

Selected publications:

- Efficient outlier detection presented at NIPS 2013 [Poster]